

Vavada Casino | Vavada Registration

A reliable gambling venue is not a one-page site with clumsily placed tabs and a handful of games. It is a convenient, well-designed, secure resource that operates legally under a license. Vavada Casino meets all of these requirements. Vavada has operated honestly for six years and in that time has never tarnished its reputation. In reviews, Vavada casino is praised for its variety of games, strong customer focus, and timely payouts. Now is a good time to take a closer look at the official Vavada website and its advantages.

| 🕹️ Игровая платформа | Вавада |

| 🎯Дата открытия | 13.10.2017 |

| 🎰 Топовые провайдеры | NetEnt, Igrosoft, Novomatic, Betsoft, EGT, Evolution Gaming, Thunderkick, Microgaming, Quickspin |

| 🃏Тип казино | Браузерная, мобильная, live-версии |

| 🍋Операционная система | Android, iOS, Windows |

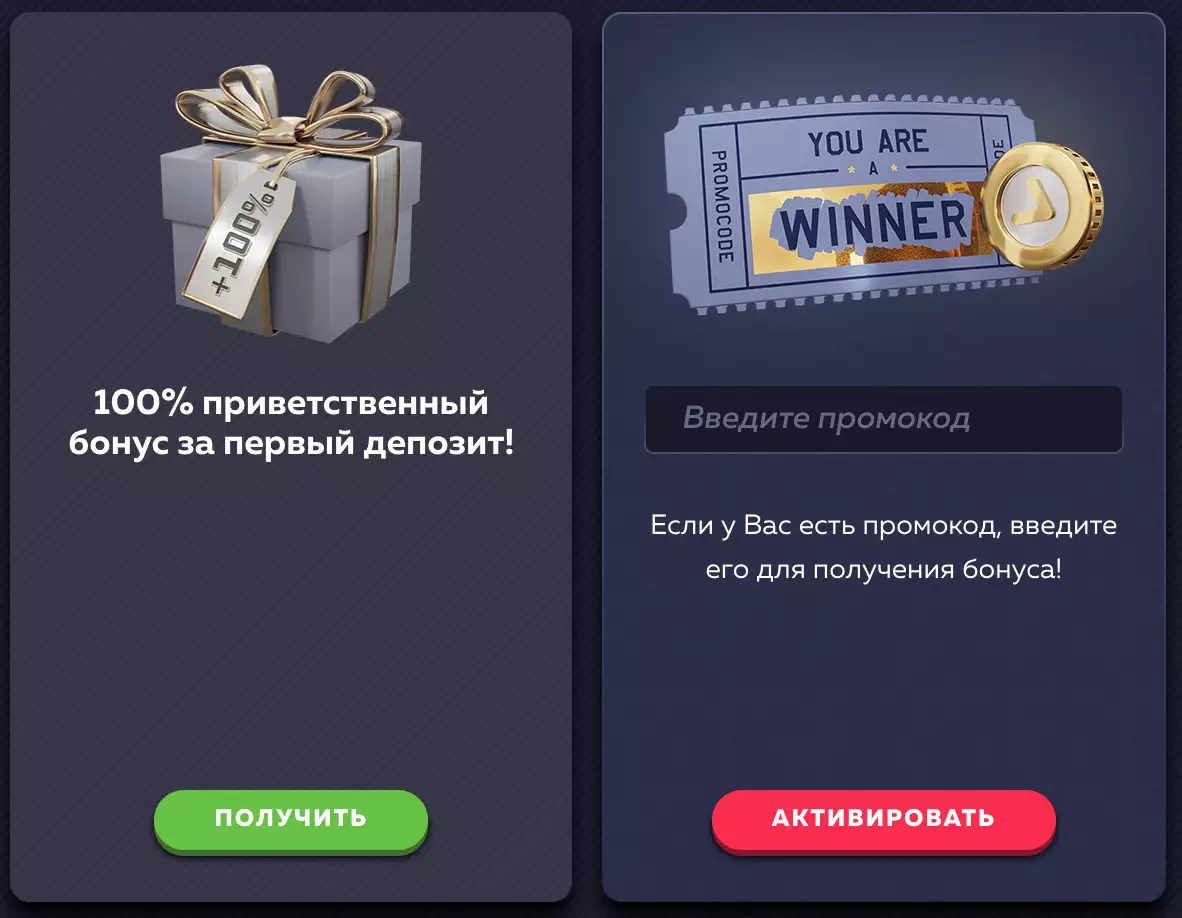

| 💎Приветственные бонусы | 100 фриспинов + 100% к первому депозиту |

| ⚡Способы регистрации | Через email, телефон, соц. сети |

| 💲Игровые валюты | Рубли, евро, доллары, гривны |

| 💱Минимальная сумма депозита | 50 рублей |

| 💹Минимальная сумма выплаты | 1000 рублей |

| 💳Платёжные инструменты | Visa/MasterCard, SMS, Moneta.ru, Webmoney, Neteller, Skrill |

| 💸Поддерживаемый язык | Русский |

| ☝Круглосуточная служба поддержки | Email, live-чат, телефон |

Vavada casino: key facts

To start, take a look at the table below for the main facts about Vavada casino.

| Parameter | Details |
|---|---|
| Launch | October 2017 |
| Founder | Max Black |
| Operator | Vavada B.V. |
| Legal basis for gambling services | Curacao license |
| Age requirement | 18+ |
| Site languages | English, Russian, Portuguese, French, Spanish, Bulgarian, Kazakh, and others |
| Supported currencies | UAH, RUB, USD, EUR, CAD, PLN, BRL |
| Minimum deposit | 50₽ or $1 |
| Minimum withdrawal | 1000₽ or $20 |
| Payment systems | Bank cards (Visa, Mastercard), e-wallets (WebMoney, Neteller, Yandex Money, Skrill, Piastrix, VouWallet, MuchBetter), exchanges (Binance), crypto accounts (Bitcoin, Ethereum, Litecoin, Tether ERC-20) |
| Withdrawal time | Up to 24 hours |

Interestingly, Vavada online casino was founded by an amateur gamer. Max set out to show gamblers an entirely new level of quality, and he succeeded. Today Vavada Casino has no equal among platforms in the CIS, and worldwide it ranks among the leaders in every rating.

Design and navigation at Vavada

The first thing that impresses visitors is the look of the official Vavada website. The design uses dark tones with accents of neon lettering and graphics in yellow, green, red, and blue. The crimson brand logo glows in the left corner and is impossible to miss.

A large Vavada casino banner sits in the center of the screen. It shows the current sizes of the various jackpots, tournament information, and the welcome reward package. The banner is not clickable: you can only read it and then navigate to the relevant section yourself for details on the offer.

Below the banner are the colorful icons of Vavada casino slot machines. The most popular and newest titles with a high payout rate are at the top. To the side are a search box for finding games by title or developer and separate filters for experienced visitors who know what they want to find in the catalog.

Below the list of slots, the footer of the official Vavada site contains information that is especially important for newcomers:

- Answers to frequently asked questions.

- A button for contacting Vavada administration.

- The full list of payment methods.

- A copy of the license agreement.

- Links to social networks: Instagram, Telegram, VKontakte, YouTube.

- The responsible gaming policy.

We recommend reading all sections of Vavada casino and paying special attention to the rules of conduct on the platform and to players' rights and obligations. Uninformed newcomers often accuse Vavada administration of refusing to refund money they have spent. Few people know that a decision to place a bet is final and irrevocable. Even if a bet was placed by accident, you will not be able to prove it, and the money will not be returned. This is one of the reasons to take responsibility for your actions and to bet real money thoughtfully.

It is worth noting that Vavada management does not pressure customers into playing for money. If you want to try the free format without risk, choose demo mode when launching a slot. It is a great way to learn how a game works, see its payouts, and decide whether to continue with real money.

If you feel you are developing a gambling addiction, take the free test offered by Vavada administration. Vavada does not encourage an unhealthy attitude toward gambling: you can stop playing and deactivate your account at any time.

Working Vavada mirror: what it is, how it works, and how to find it

Users in Russia increasingly see a notice that vavada.com is unavailable for legal reasons. Even if the site appears in search results, you will not be able to log in to your account or use its features. This indicates a block by Roskomnadzor. The agency follows Russian law and blocks access to Vavada casino as an illegal site. But even these measures do not stop gamblers. The developers always keep several links to working Vavada mirrors in reserve. Backup Vavada casino domains keep working during a block and give visitors full access to all features: opening an account, making deposits, launching any game in the catalog, and contacting support.

Links to Vavada mirrors are created in advance. A safe way to get them is to contact club staff on social networks. VIP players are especially lucky here: they can message their personal manager in any messenger and request the full list of Vavada mirrors.

If you decide to look for a backup Vavada casino domain yourself, use only official resources and forums with audiences in the millions. Find the relevant thread and ask real players which Vavada mirrors are currently working. Someone will almost certainly reply with a link.

What to do next:

- Follow the link.

- Click the login button on the right side of the screen.

- Enter your account username and password.

- Confirm the login with the button at the bottom.

Once your Vavada account loads, go to the catalog and choose a game you like. The mirror keeps the full list of games, and the free mode and all popular categories are available too. Registration is also supported on mirrors, and your data will be available on all Vavada domains.

If a link stops working, get another one. When the official Vavada site is restored, you can switch back to it and continue betting.

List of working Vavada mirrors:

Registration: step-by-step guide

Let's start with who can register at Vavada casino:

- Anyone who is 18 or older. Registration by minors is prohibited and leads to a permanent account ban. The security service closely monitors suspicious activity and, if necessary, requests a photo of a passport to verify the data. If the age in the profile does not match the official document, the customer faces an unpleasant investigation.

- Anyone registering a Vavada profile for the first time. Registering multiple accounts under one name is considered fraud and a violation of the venue's policy. This trick used to be exploited to collect more bonuses, so management took action and prohibited creating duplicate accounts.

If you are an adult amateur gamer just getting to know the official Vavada site, welcome. To join the friendly community of gamers, find the registration banner on the Vavada home page and click it to open the sign-up form. In the "Registration" window that appears, enter the email address or phone number you plan to use as your login. Then enter a strong password; you can strengthen account security later with two-factor authentication. At the very bottom of the form, find the note about the community policy and tick the box to confirm that you agree to follow the casino's rules.

After registering at Vavada, don't rush to place bets. First confirm your email in your account. A message with a confirmation link arrives within a couple of minutes. Follow the link and you will be taken to your profile automatically.

Setting up your Vavada account

The Vavada account area is a dedicated section for controlling all account functions. It is divided into 5 main tabs:

- Profile: the page with the customer's details. Besides personal data, it contains the verification, password change, and two-factor authentication panels.

- Wallet: the tab for any transaction, including deposits, withdrawals, and transfers between accounts. The active currency is shown at the bottom of the page and can be changed. You can manage up to four wallets at once.

- Bonuses: the list of rewards issued to the customer by Vavada Casino. If a countdown appears next to a reward's name, it has already been activated and is waiting to be used. At the bottom of the section you will find a field for entering a promo code and tips on earning more rewards.

- Statuses: detailed information about the customer's position in the loyalty program. The current status is highlighted in a green frame, and large text shows how much more you need to deposit to move up.

- Messages: the venue's standard newsletters and notifications about important events. If you still have questions after reading a message, the section has a button that opens a support chat.

To quickly view the sections of your Vavada account, just hover over your nickname. A drop-down panel shows all tabs, with a logout button at the very bottom.

Logging in to Vavada: main methods

Without an account, you cannot use even the basic features of the Vavada gaming hall: topping up your balance, playing for money, or withdrawing winnings to your card. If you want to play fully and receive extra bonuses, log in using one of these methods:

- With a username and password: after clicking the login button, enter the credentials you created during registration. If you cannot log in, check that the username and password are correct and, if necessary, restore access to your account via email.

- Via social networks: a quick way to use your existing Facebook, Google, Twitter, Instagram, or other accounts. Clicking a social network icon opens a small window where you complete the standard login on that site. The official Vavada site then refreshes and you are taken to your profile automatically.

If an error appears on the page, the domain is having problems. Find a current mirror and repeat all the steps there.

Best slots at Vavada casino

| 🔥 No-deposit bonus | 100 free spins |
|---|---|
| 💻 Official website | vavada.com |
| 🎲 Casino type | Slots, tables, live, tournaments |
| 🗓 Working mirror | Available |

Vavada slot machines: all categories

The Vavada game library is considered one of the best in the CIS and worldwide for two reasons:

- Variety: the list contains more than 5000 games of different genres and formats, and the number keeps growing. New titles are added a couple of times a week, or every two days. New releases always appear first in the list and are marked with a special tag, so they are impossible to miss.

- Licensed developers: Vavada works with companies such as Yggdrasil, Turbo, True Lab, Tom Horn, Thunderkick, Three Oaks, Spribe, Slotmill, Red Tiger, QuickSpin, Push Gaming, Print Studios, Pragmatic Play, Playtech, PG Soft, Nolimit City, NetEnt, Calamba, Igrosoft, Habanero, Gamomat, Evolution, and many more. The total number of providers long ago exceeded 50. All of them are known worldwide, are honest with their customers, and offer high-quality, reliable software with guaranteed fairness. Games always run on a separate server, so that neither casino staff nor gamblers have any chance of tampering with a game to obtain an unfair win.

Among the regular categories, Vavada's bonus-wagering slots stand out. They are made exclusively for playing through free spins and other bonus units. These games always feature high-quality graphics, many extra levels, and plenty of ways to win.

As for genres, Vavada's catalog of several thousand games is divided into 4 main lobbies. Let's look at them in more detail.

Online slots

The largest category of Vavada games, with interesting themes and simple rules. Here you will find reels featuring animals, fruit, bandits, travelers, princes, robbers, cowboys, ancient Egyptian and ancient Greek creatures, gods, diamonds, monkeys, and much more. Vavada casino slots are listed in order of decreasing popularity. To make finding a game easier, use the provider search and the main tags at the top of the category:

- Hit: marked with a purple flag. These are today's top slots: Sweet Bonanza, The Dog House, Rich Wilde and the Tome of Madness, Great Pigsby Megaways, Minotaurus, Twin Spin XXXtreme, Crazy Monkey, Blood & Shadow, Royal Potato, Folsom Prison. Several thousand players are online in them at once, all betting at the same time and landing big wins.

- New: marked with a red flag. These are games from top providers that were just added to the library. Recent additions include Dr. Rock & the Riff Reactor, Coba Reborn, Wild Turkey Megaways, Scroll of Seth, Starfire Fortunes TopHit, Jack and the Beanstalk Remastered, Shinobi Spirit, Vending Machine, Bounty Hunters.

- Pre: an exclusive Vavada section with unreleased titles. Gamblers can try part of a game before its release and be among the first to judge its quality.

Next to the standard tags is one more flag with a heart icon. Each Vavada gambler builds this category for themselves by adding favorite titles. To avoid searching for them later, just open your own library and pick a game from there.

Bets in Vavada slots range from a few kopecks to hundreds of dollars. The minimum stake varies by game.

Live games

A section with live streams from 4 leading developers:

- Evolution: a Latvian studio known for the high reliability and quality of its live games. The Vavada casino lobby features Double Ball Roulette, Ruletka Live, Funky Time, Bac Bo, Peek Baccarat, Craps, and Infinite Free Bet Blackjack. A single game may run in several streams with different minimum bets and languages. All the studios are colorful and engaging, turning ordinary betting into a real show.

- Pragmatic Play: a developer from Malta and Gibraltar. Its main advantage is a focus on mobile devices: you can join a stream not only from a PC but from any smartphone. The games are entertaining: Vegas Ball Bonanza, Power Up Roulette, Dragon Tiger, Speed Blackjack, and Mega Roulette.

- Playtech: a company with a long history, founded back in 1999 in Israel. It develops software only for the best gambling venues. Popular games include Age of the Gods, The Gladiator, and Robocop, with minimum bets of one cent.

- Betgames: a provider with an unconventional take on live streams. Most of its games are card games and lotteries, including Hot Seven, Andar Bahar, War of Bets, Wheel of Fortune, and Dice Duel.

Each stream features a real host who explains the rules and entertains the players. Everything happens on camera, so results cannot be faked. A chat in the corner of the screen lets participants talk to each other and to the host. Playing live games requires an account.

Vavada tables

An interesting section for fans of card games and roulette. Vavada online tables are divided into subcategories, just like the slots. The popular list includes Aviator, Cash Plane X5000, First Person Roulette, Crash X, Mines, First Person Blackjack, Deep Rush, Spaceman, Space XY, Fruit Towers, RNG First Person Mega Ball, Joker Poker. There are new titles too: Lines, Take my Plinko, Dogs' Street, Save the Princess.

Demo mode uses Vavada's virtual currency. The balance is unlimited, and wins are determined by random numbers. When your virtual balance approaches zero, just reload the game and keep playing without risk.

Vavada bonuses today

The reward system at Vavada is geared toward active players. Administration rewards customers for the smallest actions, even a like or a comment on social media.

Newcomers at Vavada get special privileges: they receive a welcome bonus package designed to boost their winnings from the very start without a large investment. How often regular players are rewarded depends on their engagement: the more active you are in the slots, the higher your chance of an extra boost to your balance.

Wagering bonuses is mandatory for everyone. Administration sets a wagering requirement on each reward to ensure bonus money is withdrawn fairly. Until bonus winnings have been fully wagered with real money, you cannot withdraw them to your card.

As for bonus types, the platform currently runs 4 interesting promotions on a regular basis.

100 free spins

The first bonus for new Vavada players, requiring no deposit or extra steps. After your first login to your new profile, 100 free spins will be waiting in your account. Open the bonus list to activate them. After you press the button, you will see a countdown: the time within which you must use and wager the spins in full. You have 14 days. When that period expires, the bonus is cancelled and no longer available.

The spins can only be used in one Vavada game: Great Pigsby Megaways. It was developed specifically for wagering. Its piggy-bank theme, with many extra levels, is sure to appeal to both newcomers and experienced players.

To enjoy the process, we suggest turning on autoplay and simply watching. When all hundred spins are used, your winnings appear on screen. They must be wagered x20 in any Vavada game you like. For example, if you win $20, you need to place bets totaling $400 before you can withdraw the winnings.
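The x20 rule above is plain multiplication. Here is a minimal Python sketch, assuming the requirement is exactly winnings times the multiplier and that every bet counts in full toward it (the text does not say whether some games count less). The function names are hypothetical and not part of any Vavada interface.

```python
def wagering_requirement(bonus_winnings: float, wager_multiplier: float) -> float:
    """Total amount that must be bet before bonus winnings can be withdrawn.

    Assumption: requirement = winnings * multiplier, every bet counts 100%.
    """
    if bonus_winnings < 0 or wager_multiplier < 0:
        raise ValueError("amounts must be non-negative")
    return bonus_winnings * wager_multiplier


def remaining_to_wager(required: float, already_wagered: float) -> float:
    """How much more must be bet; never negative."""
    return max(required - already_wagered, 0.0)


# Example from the text: $20 won with the free spins, x20 wager.
required = wagering_requirement(20.0, 20)
print(required)                              # 400.0
print(remaining_to_wager(required, 150.0))   # 250.0
```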

Deposit multiplier

Another welcome offer from Vavada. Since wagering the no-deposit bonus requires making a deposit anyway, administration decided to reward newcomers for that too. When you top up your Vavada account with $1 to $1000, your deposit is doubled. How it works:

- The deposit itself stays on the main balance.

- The matching bonus appears on a separate bonus balance and is spent first.

To start spending, open any Vavada slot and choose whatever bet size you like. Winnings made with the bonus balance are tracked separately and, like the free-spin winnings, must be wagered. The requirement is slightly higher, x35, but that does not stop players from meeting it and withdrawing their winnings the same day.

The deposit bonus is granted only once, so we recommend taking advantage of it.
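The two-balance mechanics can be illustrated with a short Python sketch. It assumes a 100% match on a single first deposit and bonus funds spent before real funds, as described. It also assumes that deposits above $1000 are matched only up to $1000, which is a guess because the text only names the $1 to $1000 range. `Balances`, `first_deposit`, and `bonus_wager_target` are hypothetical names.

```python
from dataclasses import dataclass

MIN_DEPOSIT = 1.0     # USD, from the text
MAX_MATCHED = 1000.0  # USD, top of the matched range (cap above it is assumed)
BONUS_WAGER = 35      # x35 on winnings made with the bonus balance


@dataclass
class Balances:
    main: float   # real money from the deposit
    bonus: float  # matching bonus funds

    def place_bet(self, stake: float) -> None:
        """Deduct a stake, drawing on the bonus balance first."""
        if stake <= 0:
            raise ValueError("stake must be positive")
        if stake > self.main + self.bonus:
            raise ValueError("insufficient funds")
        from_bonus = min(stake, self.bonus)
        self.bonus -= from_bonus
        self.main -= stake - from_bonus


def first_deposit(amount: float) -> Balances:
    """Apply a 100% match (assumed cap: MAX_MATCHED) to a first deposit."""
    if amount < MIN_DEPOSIT:
        raise ValueError("below the minimum deposit")
    return Balances(main=amount, bonus=min(amount, MAX_MATCHED))


def bonus_wager_target(bonus_winnings: float) -> float:
    """Total bets needed before winnings made with bonus funds can be withdrawn."""
    return bonus_winnings * BONUS_WAGER


acct = first_deposit(50.0)
acct.place_bet(60.0)              # 50 comes from the bonus, 10 from the main balance
print(acct)                       # Balances(main=40.0, bonus=0.0)
print(bonus_wager_target(25.0))   # 875.0
```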

Cashback

On the official Vavada casino site, every detail gets close attention. Bet results don't simply disappear: at the end of each month they are compiled into individual statistics for every customer. There are two possible outcomes:

- Wins outweigh losses and the player ends up in profit.

- Total spending exceeds total winnings.

Of course, everyone wants to beat Vavada, but the random outcome of bets does not always allow it. Even if you end up at a loss, don't be discouraged: the club returns 10% of the current month's losses as cashback. The money is credited automatically in the first days of the following month, so check your bonus list from time to time.

Once Vavada credits your cashback, activate it and spend the money in slots. Wager any winnings x5, and then you can withdraw them without restrictions.
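As an illustration of the cashback arithmetic, here is a small Python sketch. It assumes that a month's losses mean total bets minus total winnings and that a profitable month earns nothing; both are interpretations of the text. The function names are hypothetical.

```python
CASHBACK_RATE = 0.10  # 10% of the month's losses, from the text
CASHBACK_WAGER = 5    # x5 on winnings made with the cashback, from the text


def monthly_cashback(total_bets: float, total_wins: float) -> float:
    """Cashback for one month, rounded to cents; zero if the month was profitable."""
    net_loss = total_bets - total_wins
    return round(max(net_loss, 0.0) * CASHBACK_RATE, 2)


def cashback_wager_target(cashback_winnings: float) -> float:
    """Total bets needed before winnings made with the cashback can be withdrawn."""
    return cashback_winnings * CASHBACK_WAGER


print(monthly_cashback(1200.0, 900.0))  # 30.0
print(monthly_cashback(500.0, 800.0))   # 0.0
print(cashback_wager_target(45.0))      # 225.0
```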

Promo codes

An interesting way to get more spins, chips, and cash at Vavada. The number of promo codes is unlimited, and how many you receive depends on your activity. Here is a short list of actions for which Vavada bonus codes are issued today:

- Making deposits of any amount.

- Active spending in games.

- Subscribing to the email newsletter.

- Comments and likes on the venue's social media.

- Your birthday.

- A status upgrade.

- Activity on partners' streams.

A simple way to find out whether a promo code is available for you is to contact Vavada support. This works especially well when your birthday is coming up: no one is left without a gift on their birthday. To get it, attach a photo of your passport confirming your date of birth in the chat and wait for the gift to be credited.

Loyalty program: which statuses are available at Vavada

Alongside bonuses, the platform has a ranking system. The higher your level in the loyalty program, the more privileges and features the site unlocks.

The first level is assigned to everyone automatically. It is called Newbie. Vavada customers with this status do not have to top up their account to keep it. At the same time, this rank comes with minimal privileges: almost all tournaments are closed to you, and there are no extra rewards.

To move up one level, you need to deposit at least $15 to your Vavada account within a month. You will then automatically be given the Player level. Further upgrades depend on how much you deposit:

- Bronze: $250.

- Silver: $4000.

- Gold: $8000.

- Platinum: $50000.

Amounts are given in dollars as an example. If another currency is selected as the main one in your Vavada profile, the amount is shown converted. Platinum players are the luckiest: they get a personal manager who fully replaces the support service. You can contact your Vavada manager at any time of day or night.

If you don't want to spend time climbing step by step, you can deposit the money in a single payment. Keep in mind that all levels from Player to Platinum must be confirmed every month. For example, to keep Gold status at Vavada you must deposit a total of $8000 each month, otherwise you will be downgraded. If there is no activity at all for 30 days, you revert to Newbie and lose all accumulated benefits.
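The status rules above amount to a threshold lookup on one month's deposits. A minimal Python sketch, assuming the status is recalculated from scratch each month from that month's total deposits in USD and that reaching a threshold exactly is enough. The text does not say exactly how a partial downgrade works, and `status_for_month` is a hypothetical name.

```python
from bisect import bisect_right

# Monthly deposit thresholds in USD, from the text (lowest first).
THRESHOLDS = [15, 250, 4000, 8000, 50000]
STATUSES = ["Newbie", "Player", "Bronze", "Silver", "Gold", "Platinum"]


def status_for_month(monthly_deposits_usd: float) -> str:
    """Status implied by one month's total deposits (assumed monthly recalculation)."""
    if monthly_deposits_usd < 0:
        raise ValueError("deposits cannot be negative")
    # bisect_right counts how many thresholds are <= the amount.
    return STATUSES[bisect_right(THRESHOLDS, monthly_deposits_usd)]


print(status_for_month(0))      # Newbie
print(status_for_month(250))    # Bronze
print(status_for_month(7999))   # Silver
```

Using `bisect_right` means an amount landing exactly on a threshold, such as $250, maps to the higher status.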

Vavada tournaments: types and features

Tournaments are the fourth category in Vavada's catalog. What sets them apart is real-time play against real opponents. Thousands, even hundreds of thousands, of gamblers take part in competitions every day, battling for a big prize. The prizes are genuinely generous: even newcomers can take home $100 or more. Notably, tournament winnings do not need to be wagered; the money can be withdrawn to a card immediately.

The official Vavada site has three types of tournaments. Each has its own rules, requirements, and prize pool. The table below covers each one.

| Name | Who can take part | Slots for betting | Rules | Prize pool |
|---|---|---|---|---|
| X-Tournament | All ranks, from Newbie to Platinum | Bounty Hunters, Dead Canary, Bison Battle, Mental, Fire Hopper, Fruit Party | Place bets of 15 cents or more in any game and land wins | $50000 to $65000 |
| Vavada Cash Tournament | Bronze and above | Fruit Duel, Forest Fortune, Gladiator Legends, Born WILD, Hand of Anubis, Itero, Mayan Stackways, Outlaws Inc | Play with virtual chips and win real money. Extra attempts can be bought no more than twice, at $6 each | $25000 |
| Free-spin tournament | VIPs: Silver, Gold, Platinum | Rocket Reels, Stack 'em, Tasty Treats, Toshi Video Club, Xpander, Miami Multiplier, Mystery Motel, The Respinners, King Carrot | Use 100 free spins in a single slot. If you run out of attempts, you can buy more | $20000 to $25000 |

The tournament category also has a jackpot page. Anyone can win these astronomical sums; the key is persistence. All Vavada jackpots fall into three categories: Mega, Major, and Minor. The smallest jackpot is $53.72 and the largest is $383712.91. Support or administration announce in advance which slots can award the prize. To avoid missing an announcement, make sure you are subscribed to the email newsletter and follow the social media accounts.

Mobile casino: browser versions and apps

Vavada casino offers two ways to access the venue from mobile devices.

Vavada casino mobile version

It opens in the browser on a smartphone or tablet. You can use the built-in browser, such as Safari on iPhone, or any browser from the app store, such as Opera or Google Chrome. Just find the club's main site in a search engine and open it from the link. Mobile access is detected automatically, and the layout immediately adapts to the screen size.

Login on the Vavada mobile version works the usual way, with a username and password. After you log in, your account shows all your statistics: current balance, rank, and unused rewards. The support chat and your conversation history are preserved.

Not all games are available in the Vavada mobile casino, since some have not yet been adapted for small screens. That is not a problem, because the list still includes hits such as Let It Burn, Wild West Gold, The Hand of Midas, Pyramyth, and others.

Vavada app for Android

This is downloadable software for smartphones and tablets. To download the official Vavada app:

- Ask support in the chat for a download link.

- Follow the link and start the download.

- Install the APK file.

- Launch the app from its home screen icon.

- Log in to your account and play without restrictions.

If a warning about an unknown source appears during installation, simply confirm that you trust the developer by ticking the box. You don't need to worry about the safety of your personal files. The official Vavada app is checked for viruses, is updated regularly, and meets all reliability requirements. Your account cannot be hacked through the app, because it uses double SSL encryption.

Unlike the mobile version, the app can run demo mode offline, so you can enjoy your favorite Vavada slots even while traveling. If the official site is blocked, the app is not affected: it automatically picks working Vavada mirrors without any action from the user.

Deposits and withdrawals at Vavada

All account operations are performed in the wallet. To make your first deposit at Vavada, open this tab, choose a payment method (bank card, e-wallet, or cryptocurrency), enter an amount of at least $1, and submit the request. You will need to confirm the transaction in your bank's app. If an error occurs during the deposit, refresh the page and try again. Keep in mind that the currency of your card or account must match the selected deposit currency, otherwise the payment will not go through.

Withdrawals work the same way, with a few important requirements:

- Minimum withdrawal: $20.

- Payment details must be in your name and must match the method used for the deposit.

- Processing time: up to 24 hours.

When you request a withdrawal at Vavada, compliance with wagering requirements and club rules is checked manually, which is why these time frames apply. If the security service finds signs of fraud or incomplete wagering, the withdrawal may be delayed until the matter is resolved.

Vavada verification

All payments can be made anonymously as long as the amount does not exceed $1000. Anyone planning to bet and withdraw large sums will need to confirm their identity with a passport or driver's license.

How to do it:

- Go to your Vavada account and open the Profile tab.

- Scroll down until you see the verification panel.

- Click the paperclip icon to attach the document.

Then just wait for staff to complete the check. It usually takes no more than half an hour.

Verification at Vavada is done once, after which daily, weekly, and monthly withdrawal limits are lifted.
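The withdrawal and verification rules above can be summarized as a simple pre-check. This is a hypothetical Python sketch, not Vavada's actual logic. It assumes amounts in USD, models "details must match the deposit method" as plain string equality, and does not model the manual review or the 24-hour processing time.

```python
MIN_WITHDRAWAL_USD = 20.0     # from the text
ANONYMOUS_LIMIT_USD = 1000.0  # payments above this need verification, per the text


def withdrawal_problems(amount: float, method: str, deposit_method: str,
                        verified: bool) -> list[str]:
    """Return the reasons a withdrawal request would be rejected (empty if none)."""
    problems = []
    if amount < MIN_WITHDRAWAL_USD:
        problems.append("below the $20 minimum")
    if method != deposit_method:
        problems.append("method differs from the deposit method")
    if amount > ANONYMOUS_LIMIT_USD and not verified:
        problems.append("identity verification required above $1000")
    return problems


# ['identity verification required above $1000']
print(withdrawal_problems(1500.0, "Visa", "Visa", verified=False))
# []
print(withdrawal_problems(100.0, "Visa", "Visa", verified=False))
```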

Support service

You can contact Vavada support in several ways:

- via the chat on the website,

- via Skype,

- by mobile phone.

To open the chat on Vavada, find the question mark icon on the page (it has a yellow outline and is usually in the top panel) and click it. A conversation with an operator opens at the bottom. Send a simple message such as "I need help" and wait for an operator to join. This takes 5 to 20 minutes on average. Then briefly describe your problem so the agent can suggest possible solutions.

The main advantage of Vavada support is its individual approach. When you contact the chat, you can be sure your issue will be resolved soon. Reviews also praise support for its responsiveness: staff reply quickly, are polite, and never leave the chat until the user confirms the problem is solved.

- Dina: Sweet Bonanza is one of my favorite games. Sometimes I get hooked for a couple of hours.

- Marina: If only it had a sports betting section, it would be the perfect casino.

- Tatiana: I decided not to take risks and started with the demo. I have to say it's really interesting to watch the game, even when you don't get any money for it.

- Ruslan: Last month I joined the affiliate program. Now I consistently earn a hundred bucks or more, and I'm happy with my decision.

- Kostya: I've been waiting for a reply from support for a whole day. What kind of service is this?